Part 2 of the “Introduction to Generative AI” series.

Note: Sections of text shown in green italics are prompts that can be entered verbatim in a chat application such as ChatGPT, Bard or other.

For Part 1 click here.

The first part of our series introduced the concept of Generative AI (GenAI). It provided an overview, beginning with the fundamental principles of GenAI and extending to its advanced applications. It included a historical perspective of Generative AI and a glossary of key terms such as ‘foundation model’ (FM), ‘prompt,’ and ‘completion.’ It also explored various applications of Generative AI, including chatbots, content creation, art, and design, and offered a comparative analysis of several GenAI chatbots like ChatGPT, Microsoft Bing Chat, and Google Bard.

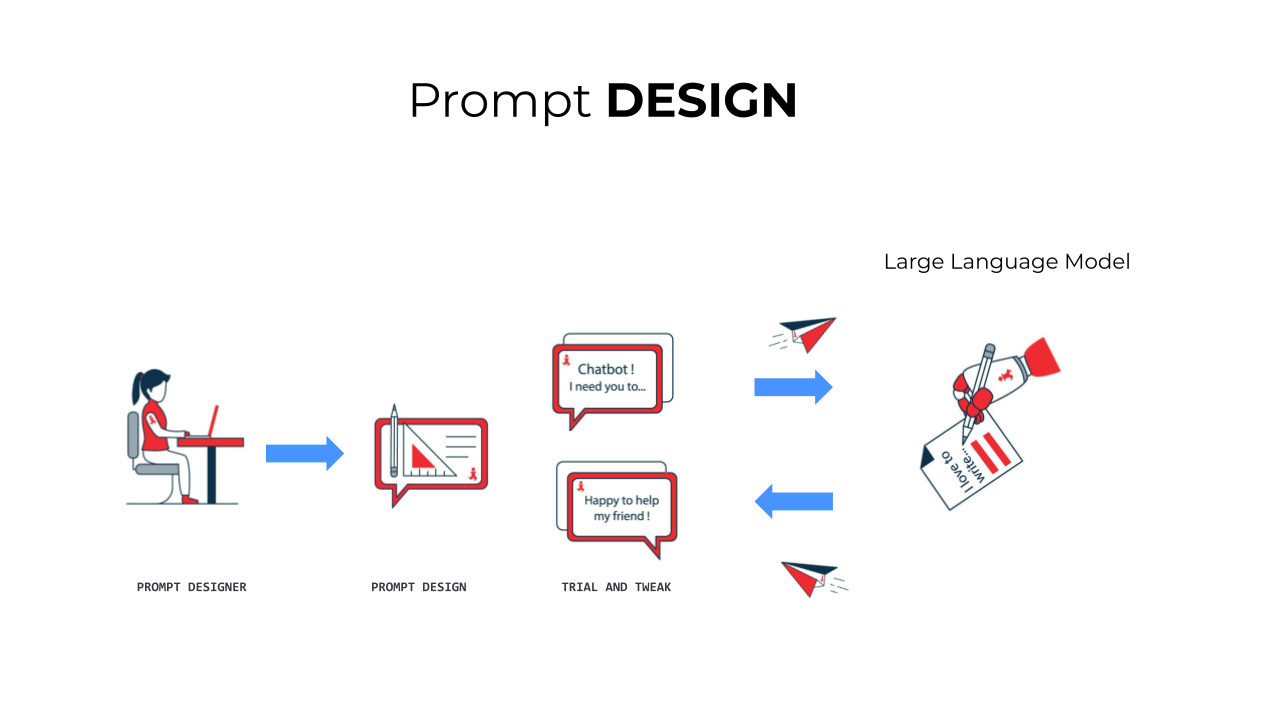

In Part 2, we delve into the techniques of effectively communicating with Large Language Models (LLMs). This process, commonly known as ‘Prompt Design,’ involves crafting clear and precise instructions to achieve desired outcomes. A well-constructed prompt ensures accurate and comprehensive responses, whereas a poorly formulated one might lead to incomplete or erroneous information.

We also examine how LLMs, such as OpenAI’s GPT-3 and GPT-4, have transformed the way we utilize computers as intelligent assistants. The success of these interactions significantly hinges on the ability to skillfully design prompts. This article focuses on the fundamental use cases these systems are designed for. Given the ‘general purpose’ nature of LLMs, which allows them to adapt to a wide array of novel scenarios, it’s challenging to provide an exhaustive list of use cases. However, we present a broad spectrum of categories and examples to illustrate their versatile applications.

Understanding Suitable Use Cases for LLMs:

LLMs, the “engine” behind popular chat services such as ChatGPT and Bard, are versatile and can handle a variety of tasks. In contrast, AI models that preceded LLMs were typically trained on a specific data set, so they performed well on that one use case but poorly on a new or even an adjacent one. For example, an AI-based natural language translation service (such as https://translate.google.com) can translate fairly accurately from one language to another, but it cannot respond intelligently if you ask it a question about the contents of the translation. LLMs, on the other hand, can perform translation, answer questions, and a whole lot more.

The ability of an LLM to perform well in a use case for which it was not specifically trained is called “Zero Shot Inference.” Here, “zero shot” means that no examples of that particular task were used in training the LLM, yet the model is able to provide a good answer to a given request.
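In a chat application, a zero-shot request is simply a prompt that describes the task without including any worked examples. The following is a minimal sketch of that idea; the function name `zero_shot_prompt` and the sentiment task are illustrative assumptions, not part of any chat service's API.

```python
def zero_shot_prompt(task: str, text: str) -> str:
    """Build a prompt that states the task but supplies no worked examples."""
    return f"{task}\n\nText: {text}\nAnswer:"


# Hypothetical task: the prompt contains the instruction and the input only.
prompt = zero_shot_prompt(
    "Classify the sentiment of the text as Positive, Negative, or Neutral.",
    "The checkout process was quick and painless.",
)
print(prompt)
```

The resulting text can be pasted into ChatGPT, Bard, or a similar chat application as is.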

Now let us summarize the broad set of use cases so that you, the reader, can quickly understand the power of LLMs. The table below names each category of use cases that LLMs can satisfy, lists the detailed types within it, and rates how effective LLMs are at each.

| Use Case Category | Detailed Types | How effective are LLMs |

| Content Generation: On prompting, the LLM generates text that satisfies a particular requirement as detailed in the next column. | -Sentence completion -Article authoring -Email response generation -Creative writing or imaginative stories such as a news article, poems, scripts for movies, TV shows, musical pieces and plays -Blog posts -Document summarization -Social media content generation -Marketing material authoring -Letters -Technical documentation -Scientific reports -Product reviews -Essay writing -Speech writing -Terminology definition -Debate points -Paraphrasing, rewording, or improving anyone’s written materials. And more. | Extremely effective. To generate content of high quality and sufficient length, the prompts need to be well defined. In some cases, multiple prompts need to be provided in sequence to achieve the desired results. This sequence of prompts is called Prompt Chaining. |

| Content Summarization: On prompting, the LLM generates a summary of an original text. | -Condense lengthy documents or texts into concise summaries while preserving key information. | Extremely effective at single document summarization with a simple prompt. If you want to achieve summarization across documents, say you want multiple reviews to be summarized into one, then that is also possible with moderate effort. |

| Creative Content: On prompting, the LLM creates text of a creative nature, a domain previously exclusive to humans. | -Generate new ideas, solutions, and approaches to problems. -Company names -Product names -Original music pieces in various genres and styles -Create interactive games, develop engaging storylines, and generate humorous content. | Extremely effective at generating creative content. The author of this article generated the name Jenz.ai by using a prompt that asked the LLM to come up with names that met his criteria. The name suggestions were then cross-referenced in Namecheap for domain name availability. |

| Learning Assistance: On prompting, the LLM can answer questions and provide explanations at different levels of sophistication. | -Providing explanations and educational support. -Adapt to individual needs -Offer tailored educational content. | Extremely effective. The LLM is capable of providing explanations that are suited to the intended audience. The author used both ChatGPT and Bard in researching many of the terms and much of the content on this website. |

| Technical Assistance: On prompting, the LLM can help solve technical issues, write snippets of code and more. | -Offering help with coding, troubleshooting, and technical advice. | Reasonably effective. The LLM is capable of providing good answers to technical questions. However, the chance of hallucinations increases where step-by-step processes are involved. If one step is inaccurate, it can have a domino effect on further steps. |

| Customer Support: The LLM can answer customer inquiries on the basis of a website or a set of documents. | -Handling inquiries and offering product guidance, responses to customer inquiries and complaints. | Extremely effective. The LLM is capable of providing good chat and conversational support. It can draw on FAQs and past support tickets for knowledge. Be aware that connecting to real-time data sources will be required for such things as customer details and order information. |

| Personal Assistance: The LLM can behave as your agent, autonomously performing several functions previously done either by a human assistant or by the person themselves. | -Managing schedules, reminders, and information retrieval. -Booking appointments, and handling daily tasks -Back and forth conversation on any topic of personal interest | Reasonably effective. At the moment, the LLM is capable of performing only simpler tasks on your behalf. Specialized LLM-based agents exist, such as Pi from Inflection.ai. As of this writing, Pi does a few things but nothing fancy like managing schedules or booking a doctor appointment. |

| Translation: The LLM translates between a wide range of language pairs. | -Translate text from one language to another with high accuracy and fluency. | Extremely effective. The GPT-4 LLM is capable of translating between over 100 languages according to ChatGPT. It covers all of the major languages, so for most users it is a comprehensive solution. |

| Question Answering: The LLM answers general questions or questions that use a specific set of resources for answering those questions. | -Provide comprehensive and informative answers to open-ended, challenging, or strange questions. -Answer questions based on a given website, document or set of resources. | Reasonably effective. The LLM is capable of answering questions on a broad range of topics. The challenging part is to watch out for hallucinations, which are inaccuracies that the model generates due to its current inherent limitations. The other limitation is that it can have difficulty with highly technical or scientific content, where much of the information can be embedded in figures, equations or tables. |

| Business Analytics: The LLM performs analytical functions previously the domain of specific analytical systems or human analysts. | -Sentiment analysis -Analyzing data trends, generating reports, and providing market insights -Extract meaningful insights from large datasets and provide data | Reasonably effective. Sentiment analysis is available now. The other use cases are emerging rapidly. As of this writing, GPT-4 through ChatGPT attempts them, but not all of the pieces are connected yet to make it successful. |

Terminology

| Term | Definition |

| Zero Shot Inference | Zero-shot inference is a machine learning technique that allows a model to perform a task without any training data for that specific task. In other words, you as the user do not have to provide any training data for the model to be of immediate use to you. |

| Inferencing | In machine learning, inference is the process of using a trained model to make predictions on new data. It’s the final step in the machine learning workflow, where the model is put to work to generate useful insights or take action. In the case of LLMs, the task of generating a completion in response to a prompt is inference. |

Prompt Design Overview

Prompt design is the process of crafting effective prompts for large language models to elicit the desired response. It involves understanding the capabilities and limitations of the model, identifying the specific task or goal, and formulating prompts that clearly communicate the desired outcome. For simple use cases the prompts are easy to compose. It is as easy as writing your needs in the form of a question. When the LLM responds, you can then have a follow-on request for modifying or clarifying the response until you feel comfortable with the generated text. In the next section we will present a few simple prompts.

Crafting Prompts for Each Use Case

Content Generation

Example Prompt: “Write an engaging blog post about the benefits of meditation for stress relief, including scientific research and practical tips, in a conversational tone.”

Learning Assistance

Example Prompt: “Explain the principles of Newton’s laws of motion in a simple and understandable way for high school students, including real-life examples.”

Technical Assistance

Example Prompt: “I’m having trouble with a JavaScript function that’s supposed to sort an array of objects by date. Here’s the code. What’s going wrong and how can I fix it?”

Business Analytics

Example Prompt: “Analyze the recent trends in online retail consumer behavior during the holiday season, focusing on shopping patterns, preferred platforms, and product types. Summarize the findings in a report with actionable insights for a medium-sized retailer.”

Customer Support

Example Prompt: “A customer is inquiring about the best type of running shoes for long-distance marathons. Provide a detailed response, considering factors like foot arch, running terrain, and shoe material.”

Personal Assistance

Example Prompt: “Organize a week-long itinerary for a business trip to New York, including meetings, networking events, and accommodations, ensuring there’s time set aside each day for meals and rest.”

Prompt Design Pattern

In the prior section, we discussed a few types of simple prompts. In this section, we discuss how to structure your prompt so the LLM can give you a more structured answer that meets your requirements. The structuring of prompts happens in two broad parts. In Part 1, you describe the role played by the LLM and the task you want it to perform. In Part 2, you describe the formatting of the output. Conceptually, it is like telling the LLM, “here is your role and the task I want you to do, and here is how you will structure the text you generate for me”.

Before we show you a full example of a structured prompt, let us discuss various section keywords and what they mean. The keyword will appear in the final prompt exactly as shown in this table. Let us say at your work you want help from an LLM in writing an effective blog post. We break this problem down into the two parts as described earlier.

Part 1. Describe the role played by the LLM and the task you want it to perform

| Section Keyword | Description | Examples (only one is to be selected) |

| You: | The name of the role you (the user) would like the LLM to assume | “Blog Author” “Email Author” “Social Media Post Author” |

| Your task: | The task the role is to perform | “Draft a blog article” “Draft an email” “Draft a Facebook post” |

| Me: | (optional) Description of you, the user | “Marketing manager” “Corporate employee” “Social media manager” |

| Audience: | The intended consumer or reader of the generated content | “General public” “A corporate buyer” |

| Tone: | The tone that is appropriate for the audience | “Friendly” “Conversational” “Professional” “Strict” |

| Language: | The language to write the article in e.g. English or French | “English” “French” |

Part 2. Describe the formatting of the output

Here you start describing the layout of the blog article itself.

| Section Keyword | Description | Example |

| Title: | Since this is a blog article, we want to provide a title of our own. You can also ask the LLM to suggest a title. | “Meditation for stress relief” |

| Topics to cover: | Describe the overall topics you want your blog article to cover. Be as specific as possible. | “Benefits of meditation for stress relief. Scientific research supporting this hypothesis. Practical tips on how to apply best practices.” |

| Layout: | Provide section by section or chapter by chapter breakdown. Provide it in the order you would like to appear in the response. | “Title Body Conclusion Summary Keyword list Important links” |

| Output Settings: | Describe size and format requirements here | “Not to exceed 200 words Use right tables Use images” |

Here is the revised Prompt and the Completion from the LLM. For this demonstration, I am using ChatGPT, which uses GPT-4. The exact same prompt will also work with Claude and Bard, although the completion text will be different.

| Input from the user (i.e. Prompt) | Response from ChatGPT system (i.e. Completion) |

| You: Blog Author Your task: Draft a blog article Me: Jenn Jacobs Audience: College Students Tone: Friendly yet professional Language: English Title: Blog Author to provide the title Topics to cover: The blog article has to cover all of these key aspects: Benefits of meditation for stress relief Scientific research supporting this hypothesis Practical tips on how to apply best practices Layout: The blog article follows the following template for layout. Blog Article Title Body text Conclusion Summary Keyword list Important links Output Settings: Not to exceed 250 words. Output should be formatted using text and html tables where applicable. | Blog Article |
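If you reuse this pattern often, the prompt can be assembled from its keyword sections rather than typed by hand each time. The sketch below is one possible way to do that in Python. The helper name `build_structured_prompt` is an assumption made for illustration, and the section values are taken from the example prompt above.

```python
def build_structured_prompt(sections: dict[str, str]) -> str:
    """Join 'Keyword: value' sections, one per line, in the order given."""
    lines = []
    for keyword, value in sections.items():
        value = value.strip()
        if value:  # skip optional sections that were left empty, e.g. "Me"
            lines.append(f"{keyword}: {value}")
    return "\n".join(lines)


blog_prompt = build_structured_prompt({
    # Part 1: role and task
    "You": "Blog Author",
    "Your task": "Draft a blog article",
    "Me": "Jenn Jacobs",
    "Audience": "College Students",
    "Tone": "Friendly yet professional",
    "Language": "English",
    # Part 2: formatting of the output
    "Title": "Blog Author to provide the title",
    "Topics to cover": (
        "Benefits of meditation for stress relief; "
        "Scientific research supporting this hypothesis; "
        "Practical tips on how to apply best practices"
    ),
    "Layout": "Blog Article Title, Body text, Conclusion, Summary, "
              "Keyword list, Important links",
    "Output Settings": "Not to exceed 250 words. Output should be formatted "
                       "using text and html tables where applicable.",
})
print(blog_prompt)
```

Keeping each keyword on its own line makes it easy to swap a single value, such as the audience or the tone, and resubmit the prompt.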

Terminology

| Term | Definition |

| Simple prompt | A simple prompt is a brief instruction or question that provides some context for a machine learning model. It’s typically used to guide the model’s output in a specific direction or to provide additional information that may be helpful for the task at hand. Example in Text generation: “Write a poem about a robot falling in love with a human.” |

| Structured prompt | A structured prompt is a more complex and detailed type of prompt that provides a more organized and consistent way to communicate with a machine learning model. It typically consists of multiple components, each of which serves a specific purpose in guiding the model’s output. Structured prompts can be used to achieve a wider range of goals than simple prompts, and they can be particularly helpful for tasks that require a high degree of accuracy or precision. Here is a very structured Prompt: {"task": "text_generation", "style": "poem", "topic": "nature", "length": "4 lines"} |
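A JSON-style prompt like the one in the table is easiest to keep well formed when it is generated rather than typed. The following is a minimal sketch using Python's standard `json` module. The field names mirror the example above, and the variable names are illustrative assumptions.

```python
import json

# Fields mirror the structured-prompt example in the Terminology table.
prompt_fields = {
    "task": "text_generation",
    "style": "poem",
    "topic": "nature",
    "length": "4 lines",
}

# json.dumps emits straight double quotes, so the text stays valid JSON
# when it is pasted into a chat application.
prompt_text = json.dumps(prompt_fields)
print(prompt_text)
```

An LLM will usually also understand a loosely formatted version, so the strict JSON here is a convenience for the person maintaining the prompt rather than a requirement.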

Advanced Techniques for Prompt Design

There are various advanced techniques in prompt design. We will discuss one such technique called Prompt Chaining in more detail below.

Prompt Chaining

Prompt chaining in the context of text generation refers to a method where the output of one prompt is used as the input for the next, creating a sequence of interconnected prompts and responses. This technique is often used to guide a conversation or text generation process towards a specific goal or to explore a topic in depth. Here are a few examples:

Note: Only the text in double quotes needs to be entered. The rest of the text is provided for explanation purposes only.

Example 1: Story Development

The process works like this. You enter the Initial Prompt first. Then, wait for a response. After that, you enter the next prompt and wait for a response and so on.

- Initial Prompt: “Write the beginning of a very short story about a lost treasure.”

- Second Prompt: [Uses the ending of the first story segment] “Continue the story, focusing on the main character deciphering an ancient map.”

- Third Prompt: [Uses the ending of the second story segment] “Now, describe the main character’s journey to the location marked on the map.”

| You Enter (one row at a time) | Bard Responds |

| Write the beginning of a very short story about a lost treasure. | In the heart of the untamed Amazon rainforest, where the emerald canopy stretched endlessly towards the heavens, whispered tales of a lost treasure had echoed through generations. Locals spoke of a hidden trove of immeasurable wealth, buried deep within the labyrinthine jungle, guarded by ancient spirits and forgotten traps. The treasure, they claimed, was the legacy of a long-vanished civilization, a testament to their ingenuity and craftsmanship. For centuries, adventurers from far and wide had ventured into the depths of the rainforest, lured by the siren call of riches beyond their wildest dreams. Yet, the treasure remained elusive, its whereabouts shrouded in mystery, its existence a tantalizing enigma. The jungle itself seemed to conspire against those who sought its secrets, its dense foliage concealing hidden paths and its treacherous terrain testing the limits of human endurance. |

| Continue the story, focusing on the main character deciphering an ancient map. Keep it brief. | Among the latest to brave the rainforest’s perils was a young archaeologist named Dr. Amelia Grant, driven by an insatiable curiosity for the past and a yearning to unravel the mysteries that lay hidden beneath the forest’s emerald embrace. Amelia had spent years poring over ancient texts and artifacts, meticulously piecing together the fragments of knowledge that hinted at the existence of the lost treasure. Her relentless pursuit had led her to a weathered parchment map, its edges frayed and its symbols faded, yet still whispering tales of a forgotten world. Amelia spent countless hours deciphering the map’s cryptic symbols, her mind a whirlwind of theories and possibilities. She traced the intricate lines that outlined rivers, mountains, and landmarks, searching for clues that would guide her towards the hidden treasure. The map seemed to pulse with an otherworldly energy, its symbols dancing before her eyes as if beckoning her deeper into the jungle’s embrace. |

| Now, describe the main character’s journey to the location marked on the map. Keep it brief. | Amelia assembled a team of experienced explorers and porters, their hearts filled with a mix of trepidation and excitement. They ventured deep into the rainforest’s heart, their path winding through dense foliage, past towering trees and cascading waterfalls. The air hummed with the symphony of the jungle, a chorus of insects, birds, and unseen creatures. Amelia studied the map intently, her eyes scanning the landscape for any sign of the landmarks depicted on the parchment. Days turned into weeks as the team traversed the treacherous terrain, their supplies dwindling and their bodies weary. Yet, Amelia’s determination remained unwavering, her spirit buoyed by the anticipation of the treasure that lay ahead. She pressed on, her eyes fixed on the map, her mind consumed by the puzzle it presented. Finally, after weeks of relentless pursuit, Amelia and her team stumbled upon a hidden clearing, bathed in an ethereal glow. At its center stood an ancient ruin, its stone walls adorned with intricate carvings that echoed the symbols on the map. Amelia’s heart pounded with anticipation as she realized that she had reached her destination. The lost treasure, if it indeed existed, was within their grasp. |
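A chat application keeps track of the conversation for you, which is why each follow-up prompt can build on the previous response. If you were to script the same chain, the loop might look like the sketch below. It is only an illustration under stated assumptions: `run_chain` is a hypothetical helper, and `fake_complete` is a placeholder standing in for whichever LLM service you would actually call.

```python
from typing import Callable


def run_chain(prompts: list[str], complete: Callable[[str], str]) -> list[str]:
    """Send prompts in order; each request carries the conversation so far."""
    transcript = ""
    responses = []
    for prompt in prompts:
        # The earlier prompts and replies are included so the model can
        # continue from where the previous response ended.
        request = f"{transcript}User: {prompt}\nAssistant:"
        reply = complete(request)
        responses.append(reply)
        transcript = f"{request} {reply}\n"
    return responses


def fake_complete(request: str) -> str:
    # Placeholder for a real LLM call: reports how many user turns it saw.
    return f"[reply to turn {request.count('User:')}]"


story_prompts = [
    "Write the beginning of a very short story about a lost treasure.",
    "Continue the story, focusing on the main character deciphering an "
    "ancient map. Keep it brief.",
    "Now, describe the main character's journey to the location marked on "
    "the map. Keep it brief.",
]
replies = run_chain(story_prompts, fake_complete)
print(replies)
```

Because every request includes the growing transcript, the third prompt is answered with the first two story segments in view, which mirrors what happens when you type the prompts one row at a time.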

Example 2: Technical Explanation

- Initial Prompt: “Explain quantum computing in simple terms.”

- Second Prompt: [Uses information from the first explanation] “Can you now give an example of a problem that quantum computing could solve more efficiently than classical computing?”

- Third Prompt: [Based on the second response] “How does quantum entanglement contribute to this efficiency?”

We leave it up to you the reader to try out the above prompts. You can use ChatGPT, Bard, Bing Chat or Claude for any of these use cases.

Example 3: Educational Dialogue

- Initial Prompt: “What is the process of photosynthesis?”

- Second Prompt: [Based on the first explanation] “Could you elaborate on the role of chlorophyll in photosynthesis?”

- Third Prompt: [Building on the second response] “How does this process differ in plants that live in very low light conditions?”

In each case, the chain of prompts and responses allows for a deeper, more nuanced exploration of the subject matter. This approach is particularly useful in educational contexts, complex narrative development, or any scenario where a topic needs to be unpacked in a step-by-step manner.

Prompt chaining and using a single prompt with a sequence of instructions are two different approaches to guide LLMs in generating text, each with its own characteristics and use cases.

Summarizing Prompt Chaining

- Iterative Process: Prompt chaining involves an iterative process where each new prompt is based on the response to the previous prompt. This allows for adjustments and refinements based on the AI’s output at each step.

- Flexibility: It offers more flexibility to dynamically steer the conversation or text generation based on the evolving context.

- Depth and Exploration: This method is particularly useful for exploring a topic in depth, as it allows the user to delve deeper based on the AI’s responses, asking follow-up questions or seeking clarifications.

- Adaptability: Each step can adapt to unexpected or interesting directions the AI might take, making it ideal for more open-ended or creative tasks.

Comparing Single Prompt with Prompt Chaining

- Predefined Structure: Here, a comprehensive set of instructions or a sequence of questions/points is given in one go. This approach is more structured and less flexible compared to prompt chaining.

- Efficiency: It can be more efficient for straightforward tasks where the desired outcome is clear and does not require iterative refinement.

- Limited Adaptability: The AI follows the sequence of instructions as laid out initially, which might not account for new information or insights that emerge during the text generation process.

- Predictability: This method is more predictable in terms of output, as the AI attempts to address all points in the sequence within its response, following the set order.

In summary, prompt chaining is more dynamic, allowing for adjustments based on AI responses at each step, and is suited for exploratory or complex tasks. In contrast, a single prompt with a sequence of instructions offers a more structured approach, suitable for tasks with a clear, predefined outcome.
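To make the contrast concrete, the sketch below takes the three photosynthesis questions from Example 3 and folds them into one prompt with a numbered sequence of instructions. The helper name `as_single_prompt` and the introductory sentence it adds are assumptions chosen for illustration.

```python
def as_single_prompt(steps: list[str]) -> str:
    """Combine several instructions into one prompt with a fixed order."""
    numbered = [f"{i}. {step}" for i, step in enumerate(steps, start=1)]
    return "Complete the following steps in order:\n" + "\n".join(numbered)


steps = [
    "What is the process of photosynthesis?",
    "Could you elaborate on the role of chlorophyll in photosynthesis?",
    "How does this process differ in plants that live in very low light "
    "conditions?",
]

single = as_single_prompt(steps)  # sent once, answered in one response
chained = steps                   # sent one at a time, each after a reply
print(single)
```

With the single prompt, all three questions are fixed before the model responds. With the chained list, you can reword or replace the second and third questions after reading each answer.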

Terminology

| Term | Definition |

| Prompt Chaining | Prompt chaining is a technique used in large language models to guide the model towards a more comprehensive and informative response by providing a series of related prompts or questions. Each prompt or question builds upon the previous one, gradually refining the model’s understanding of the task or topic at hand. See the example provided above. |

Conclusion

In conclusion, we discussed how to effectively interact with Large Language Models (LLMs) like PaLM 2 (Bard chatbot) or GPT-4 (ChatGPT), emphasizing the crucial role of well-crafted prompts in leveraging their capabilities. The article explored diverse use cases for LLMs, ranging from content generation and summarization to technical and personal assistance. It highlighted their proficiency in “Zero Shot Inference,” where LLMs excel in tasks they were not explicitly trained for. A significant portion was devoted to explaining various use case categories, including creative content, learning and technical support, customer service, translation, question answering, and business analytics. The importance of precise prompt design was underscored, with detailed examples across different applications. The article concluded with insights on prompt design patterns, stressing the importance of defining role, task, audience, tone, and language. Finally, it distinguished between the effectiveness of prompt chaining versus single, well-structured prompts with sequential instructions, both crucial for guiding LLMs in generating relevant and accurate responses.

Terms to remember

Prompt, Prompt Design, Zero-shot Inferencing, Content Generation, Content Summarization, Question Answering, Sentiment Analysis, Structured Prompt, Simple Prompt, Prompt Chaining

Introduction to Generative AI Series

Part 1 – The Magic of Generative AI: Explained for Everyday Innovators

Part 2 (this article) – Your How-To Guide for Effective Writing with Language Models

Part 3 – Precision Crafted: Mastering the Art of Prompt Engineering for Pinpoint Results

Part 4 – Precision Crafted Prompts: Mastering Incontext Learning

Part 5 – Zero-Shot Learning: Bye-bye to Custom ML Models?