The Magic of Generative AI: Explained for Everyday Innovators

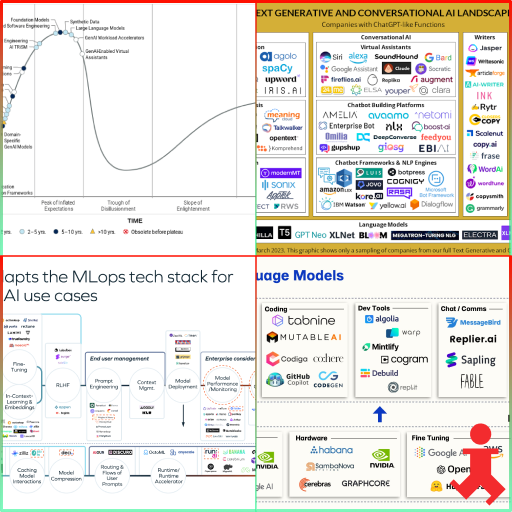

Since 2022, artificial intelligence (AI) has captivated a wide audience, driven largely by the debut of ChatGPT. In this first installment of our series, ‘Introduction to Generative AI,’ we explain generative AI in accessible terms.