Engaging with Large Language Models (LLMs) presents various challenges and opportunities, especially when implementing efficient asynchronous communication with multiple models. In this part of our series, we delve into a practical demonstration of interacting with OpenAI’s GPT-3.5-turbo and GPT-4 models using the llama_index package. We use a simple method called acomplete. This takes a simple string prompt and internally converts

Engaging with Large Language Models (LLMs) presents various challenges and opportunities, especially when implementing different models in tandem to compare their outputs. In this part of our series, we delve into a Python script designed to utilize two different versions of GPT: GPT-3.5 Turbo and GPT-4, using the llama_index package.

The benefit of using multiple models is to compare their capabilities,

Engaging with Large Language Models (LLMs) presents various challenges and opportunities, especially when implementing asynchronous streaming operations. In this part of our series, we delve into a Python model designed to interact with OpenAI’s language models asynchronously, leveraging the streaming capabilities of the LlamaIndex package.

This model enhances application responsiveness and interaction by utilizing asynchronous streaming operations. Asynchronous streaming allows the

Engaging with Large Language Models (LLMs) presents various challenges and opportunities, especially when implementing asynchronous streaming operations. In this part of our series, we delve into handling asynchronous stream API calls within the “chat” functionality of models like ChatGPT. Asynchronous streaming allows the app developer to handle generated text in real-time as it becomes available, rather than waiting for the

Engaging with Large Language Models (LLMs) presents various challenges and opportunities, particularly when dealing with streaming operations. In this part of our series, we explore how to handle streaming API calls within the “chat” functionality of models like ChatGPT. Streaming lets the app developer start using the generated text as it is produced rather than waiting

Engaging with Large Language Models (LLMs) presents various challenges and opportunities, particularly when dealing with asynchronous operations. In this part of our series, we explore how to handle asynchronous API calls within the “chat” functionality of models like ChatGPT. Asynchronous programming is essential for maintaining responsive applications, especially when integrating LLMs that may require significant processing time to generate responses.
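To make the responsiveness point concrete, here is a minimal sketch of two chat requests running concurrently with Python's asyncio. It is not the article's code: `fake_chat`, `ask_all`, `run_demo`, the model names, and the delays are hypothetical stand-ins, and `asyncio.sleep` simulates the time a real model would take to respond.

```python
import asyncio
import time


async def fake_chat(model: str, prompt: str, delay: float) -> str:
    """Hypothetical stand-in for an async chat call; sleeps instead of calling an API."""
    await asyncio.sleep(delay)
    return f"[{model}] reply to: {prompt}"


async def ask_all(prompt: str) -> list[str]:
    # Start both requests at once; gather returns results in argument order.
    return await asyncio.gather(
        fake_chat("model-a", prompt, 0.2),
        fake_chat("model-b", prompt, 0.3),
    )


def run_demo() -> tuple[list[str], float]:
    # Measure wall-clock time for the two overlapping requests.
    start = time.perf_counter()
    replies = asyncio.run(ask_all("Hello"))
    return replies, time.perf_counter() - start


if __name__ == "__main__":
    replies, elapsed = run_demo()
    for reply in replies:
        print(reply)
    print(f"elapsed: {elapsed:.2f}s")
```

Because the two simulated calls overlap, the total wait is close to the slower call rather than the sum of both, which is the property that keeps an application responsive while a model is generating.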

Working with Large Language Models (LLMs) as a developer can be challenging due to their complexity and the broad scope of their capabilities. There are numerous ways to interact with them, each with its own nuances and potential pitfalls. In this article, we demonstrate code that implements the “chat” functionality, which is fundamental to how models like ChatGPT operate. The

LlamaIndex is a popular open-source library that gives developers tools and abstractions for integrating large language models (LLMs) into software applications. It provides a unified API and essential text processing tools, and it is extensible and optimized for performance, making it well suited to building advanced features like chatbots, content generation, and data analysis

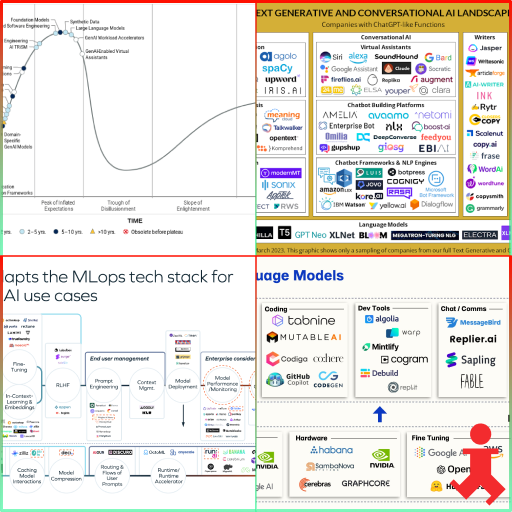

Generative AI is reshaping our world with groundbreaking tech! Dive into this thrilling universe through visually stunning guides that map out key innovators and industry game-changers. Here is our favorite list of 11 such visual treats!

Comprehensive, dynamic database of top Generative AI companies, meticulously selected based on robust criteria, highlighting pioneers and innovators in the field. Slice, dice and save your own copy!

This exciting article reveals the top 10 ways GenAI transforms our daily life, offering everything from crafting dynamic reports to penning polished emails, enhancing grammar, and effortlessly translating languages. Dive in to discover how GenAI can be your ultimate, versatile sidekick, simplifying tasks and supercharging your skills!

In the fifth and final article in this series, we discuss the inherent ability of LLMs to handle previously unseen scenarios effectively.

In the fourth article in this series, we discuss a specific advanced prompt engineering technique called In-Context Learning.

In our third installment, we dive deeper into the world of Prompt Engineering, presenting a comprehensive guide. This field encompasses a variety of techniques, which, when skillfully combined, can significantly improve the precision of responses from Large Language Models (LLMs), advancing beyond basic concepts.

Our focus in this second installment is on illustrating how this transformative technology can be integrated into everyday applications, offering practical insights for its utilization.

Since 2022, Artificial Intelligence (AI) has captivated a wide audience, particularly with the impactful debut of ChatGPT. In the inaugural installment of our series, ‘Introduction to Generative AI,’ we aim to demystify Generative AI in accessible terms.

Join more than 1,000 other people who are mastering AI in 2024

You will be the first to know when we publish new articles