LLM programming intro series:

Series

Focus: In this series of articles, we demonstrate the programming models supported by LlamaIndex. The series is aimed at technical software architects, developers, LLMOps engineers, and technical enthusiasts. We provide working code that you can copy and adapt as you please.

Links

| Link | Purpose |
| --- | --- |
| https://www.llamaindex.ai/ | Main website |
| https://docs.llamaindex.ai/en/stable/ | Documentation website |

Objective of this code sample

This Python sample demonstrates the use of asynchronous programming to interact with OpenAI, utilizing the llama_index package for GPT-3.5-turbo and GPT-4 models. The script checks for the presence of an OpenAI API key in the environment, initializes the API clients, sends a common prompt to both GPT models asynchronously, and prints the responses along with the elapsed time.

Learning objectives

2. Try out a simple design pattern which you can then adapt to your specific use case.

3. Set you up for more advanced concepts in future articles.

Demo Code

The OpenAI class from the llama_index.llms.openai module is used to interact with the GPT models. The time, os, and asyncio modules are standard Python libraries used for time measurement, environment variable access, and asynchronous programming, respectively.

# Note 1: make sure to pip install llama-index before proceeding
# Note 2: make sure your OpenAI API key is set as an env variable (OPENAI_API_KEY) as well.

# Import required packages
from llama_index.llms.openai import OpenAI
import time
import os
import asyncio

This function checks whether the OpenAI API key is present in the environment variables. If the key is found, it prints a confirmation message and returns True; otherwise, it returns False.

def check_key() -> bool:
    # Check whether the OpenAI API key is set in the environment
    # Setting it in the env is the best way to make llama_index not throw an exception
    if "OPENAI_API_KEY" in os.environ:
        print("\nOPENAI_API_KEY detected in env")
        return True
    else:
        return False
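A key that is set but empty still passes the membership test in check_key. As a minimal sketch, a stricter variant could also reject blank values. The helper name `get_api_key` and its `env` parameter are hypothetical and are not part of llama_index.

```python
import os
from typing import Mapping, Optional


def get_api_key(env: Optional[Mapping[str, str]] = None) -> Optional[str]:
    """Return the OpenAI API key, or None if it is missing or blank.

    `env` defaults to os.environ; passing a plain dict makes the helper easy to test.
    """
    source = os.environ if env is None else env
    key = source.get("OPENAI_API_KEY", "").strip()
    return key or None


if __name__ == "__main__":
    if get_api_key() is None:
        print("OPENAI_API_KEY missing or empty")
    else:
        print("OPENAI_API_KEY detected in env")
```

Returning the key itself, rather than a boolean, lets the caller pass it on without reading the environment a second time.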

The main function begins by checking for the API key using the check_key function. If the key is present, it initializes the OpenAI clients for both GPT-3.5-turbo and GPT-4 models and assigns the API key to each client. If the key is not found, the script exits with an error message.

Then, the script sets a prompt and records the start time. It then creates asynchronous tasks to send the prompt to both GPT-3.5-turbo and GPT-4 models. The responses are awaited and stored in variables. The end time is recorded, and the elapsed time is calculated and formatted. Finally, the responses from both models are printed along with the elapsed time.

async def main():
    if check_key():
        openai_client_gpt_3_5_turbo = OpenAI(model="gpt-3.5-turbo")
        openai_client_gpt_3_5_turbo.api_key = os.environ["OPENAI_API_KEY"]
        openai_client_gpt_4 = OpenAI(model="gpt-4")
        openai_client_gpt_4.api_key = os.environ["OPENAI_API_KEY"]
    else:
        print("OPENAI_API_KEY not in env")
        raise SystemExit(1)  # Exit if no API key is found

    prompt = "You are a helpful AI assistant. Tell me the best day to visit Paris. Then, elaborate."

    # Get the current time
    start_time = time.time()

    # Asynchronously call both GPT-3.5-turbo and GPT-4
    response_3_5_task = asyncio.create_task(openai_client_gpt_3_5_turbo.acomplete(prompt=prompt))
    response_4_task = asyncio.create_task(openai_client_gpt_4.acomplete(prompt=prompt))

    # Wait for both responses
    response_3_5 = await response_3_5_task
    response_4 = await response_4_task

Still inside main, once both responses have arrived, the script calculates the elapsed time, then prints the responses followed by the time.

    # Get the end time
    end_time = time.time()

    # Calculate the elapsed time in seconds
    elapsed_time = end_time - start_time

    # Format the elapsed time to two decimal places
    formatted_time = "{:.2f}".format(elapsed_time)

    # Print the responses with labels
    print("Response from GPT-3.5-turbo:")
    print(response_3_5)
    print("\nResponse from GPT-4:")
    print(response_4)

    # Print the formatted time
    print(f"Elapsed time: {formatted_time} seconds")

if __name__ == "__main__":
    asyncio.run(main())
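The two `create_task` calls followed by two `await`s can also be written with `asyncio.gather` from the standard library. The sketch below replaces the real clients with a hypothetical stand-in coroutine, `fake_complete`, which only sleeps, so it runs without an API key. The model labels and delays are illustrative assumptions, not measurements.

```python
import asyncio
import time
from typing import List, Tuple


async def fake_complete(model: str, prompt: str, delay: float) -> str:
    """Hypothetical stand-in for client.acomplete(): waits, then returns text."""
    await asyncio.sleep(delay)
    return f"[{model}] reply to: {prompt}"


async def run_both(prompt: str) -> Tuple[List[str], float]:
    """Run two stand-in calls concurrently; return (responses, elapsed seconds)."""
    start = time.perf_counter()
    # gather() runs both coroutines concurrently and returns results in call order
    responses = await asyncio.gather(
        fake_complete("model-a", prompt, 0.2),
        fake_complete("model-b", prompt, 0.3),
    )
    return list(responses), time.perf_counter() - start


if __name__ == "__main__":
    replies, elapsed = asyncio.run(run_both("best day to visit Paris?"))
    for reply in replies:
        print(reply)
    print(f"Elapsed time: {elapsed:.2f} seconds")
```

Because the two waits overlap, the elapsed time should be close to the longer delay rather than the sum of both.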

Sample Output

PARTIAL OUTPUT SHOWN HERE FOR DEMO PURPOSES...

# OPENAI_API_KEY detected in env
# Response from GPT-3.5-turbo:
# The best day to visit Paris is subjective and can vary depending on personal preferences.
# However, many people consider the spring months of April and May to be the ideal time to
# visit Paris. During this time, the weather is mild and pleasant, with blooming flowers and
# trees adding to the city's charm. Additionally, the tourist crowds are not as overwhelming
# as during the peak summer months, allowing for a more enjoyable and relaxed experience.
# <truncated for brevity>
# Elapsed time: 13.22 seconds
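The elapsed time in the output covers both requests together. Since they run concurrently, it reflects roughly the slower of the two calls rather than their sum. To see how long each model takes on its own, one option is to wrap every call in a small timing coroutine. In this sketch, `timed` is a hypothetical helper, and `asyncio.sleep` stands in for the real `acomplete` calls with made-up delays.

```python
import asyncio
import time
from typing import Any, Awaitable, List, Tuple


async def timed(label: str, awaitable: Awaitable[Any]) -> Tuple[str, Any, float]:
    """Await `awaitable` and return (label, result, seconds it took)."""
    start = time.perf_counter()
    result = await awaitable
    return label, result, time.perf_counter() - start


async def demo() -> List[Tuple[str, Any, float]]:
    # asyncio.sleep(delay, result) stands in for a model call; delays are made up
    return await asyncio.gather(
        timed("fast-model", asyncio.sleep(0.1, "short answer")),
        timed("slow-model", asyncio.sleep(0.3, "long answer")),
    )


if __name__ == "__main__":
    for label, result, seconds in asyncio.run(demo()):
        print(f"{label}: {result!r} in {seconds:.2f} seconds")
```

With the real clients, the same wrapper could be applied to each `acomplete(prompt=prompt)` call in place of the sleeps.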

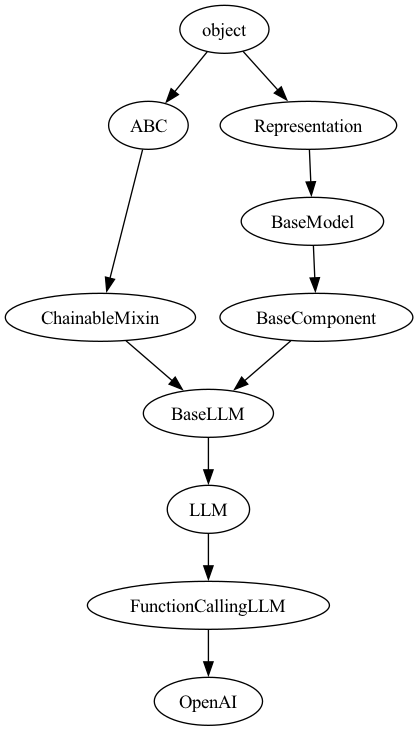

Class hierarchy of the OpenAI class