Large Language Models (LLMs) are comparable to a highly talented coworker or relative who is adept at a wide range of tasks. But just as even the most capable people need clear objectives and direction, LLMs need precise instructions to perform well. Techniques for writing these instructions, some of them detailed and rule-specific, are well documented, and AI researchers continue to invent new ones.

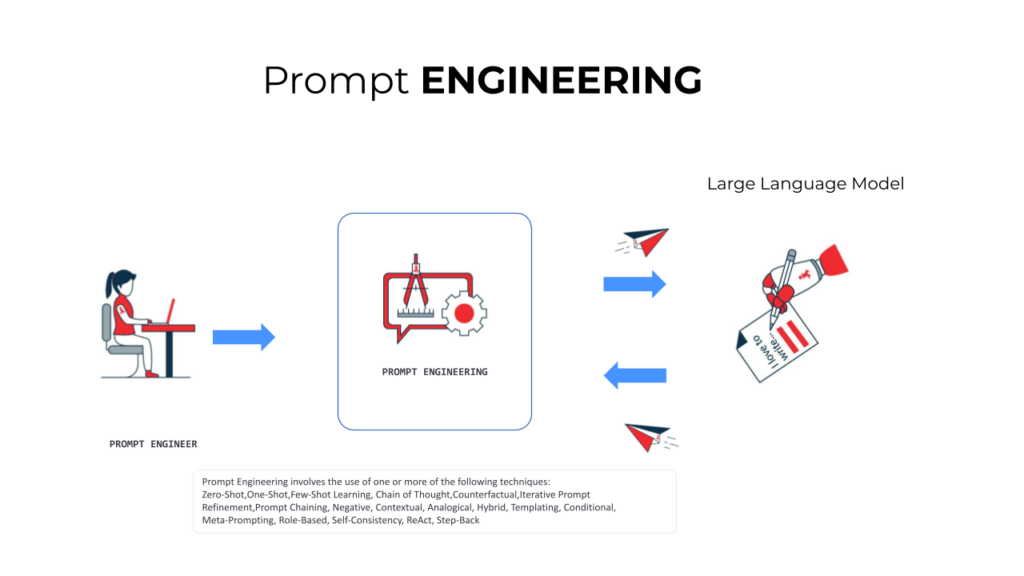

Prompt Engineering encompasses the strategies engineers use to produce sophisticated outputs. If prompt design is like interior decorating, arranging furniture in a room, then prompt engineering is like the more complex work of the architects and structural engineers who design entire buildings. It involves more systematic and strategic approaches to interacting with AI models, especially when dealing with complex tasks, refining model outputs, or exploring advanced functionality.

Comparing Prompt Design and Engineering

Prompt design and prompt engineering both concern the way we interact with LLM systems, but they focus on different aspects of that interaction. There is, however, no clear line marking where prompt design ends and prompt engineering begins.

Prompt Design

Focus: Prompt design primarily concerns the crafting of individual prompts to effectively communicate a task or query to an AI model. It emphasizes the content and formulation of a single prompt.

Creativity and Clarity: It involves being creative and clear in how a question or task is posed to ensure the LLM understands and responds appropriately. This can include the use of specific wording, context setting, and guiding the LLM towards the desired type of response.

Where applicable: Useful in everyday interactions with LLMs, such as composing a question for a language model or designing a query for an information retrieval system.

Prompt Engineering

Scope: Prompt engineering is a broader and more systematic approach. It encompasses not just the design of individual prompts but also strategies for interacting with AI models over multiple interactions or for achieving more complex goals.

Techniques and Strategies: These include prompt chaining, using templated responses, understanding how different model parameters affect responses, and systematically testing and refining prompts to optimize performance.

Where applicable: Often used in professional or research settings where the goal is to maximize the efficiency, accuracy, or reliability of LLM systems in complex tasks.

In summary, prompt design is about crafting individual prompts effectively, focusing on clarity and creativity in communication. Prompt engineering is a more comprehensive approach that involves developing strategies and methodologies for optimizing interactions with LLM systems across a range of tasks and objectives.

Overview of Most Popular Prompt Engineering Techniques

Prompt engineering, especially in the context of LLMs such as GPT-4, involves crafting and refining the input prompt to guide the model towards generating a desired type of output. The techniques below can be used individually or in combination, depending on the complexity of the task and the specific goals of the prompt engineering process. Their effectiveness varies with the capabilities and limitations of the LLM being used.

Prompt engineering is a rapidly evolving field, and new techniques are being developed all the time. However, some of the most common and effective techniques that are used in prompt engineering include:

Zero-Shot Learning: Providing a task to the model without any prior examples or context. The prompt is structured to clearly define the task or question.

One-Shot Learning: Including a single example in the prompt to guide the LLM in understanding the desired format or type of response.

Zero-shot, one-shot, and few-shot prompting all let an LLM perform a task without being trained or fine-tuned on it: the examples, if any, are supplied in the prompt itself. Zero-shot prompting uses no examples, one-shot prompting uses exactly one, and few-shot prompting (described next) uses a small number. This makes them well suited to tasks where little or no labeled data is available.

Few-Shot Learning: Providing a few examples in the prompt to establish a pattern or format for the LLM to follow. This is especially useful for more complex tasks.

It involves providing several examples within a prompt to teach the LLM a new format or style of response, such as showing it examples of jokes, poems, or a specific writing style before requesting it to generate something similar.
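
The zero-shot, one-shot, and few-shot variants described above differ only in how many examples the prompt carries. The following is a minimal sketch in Python; the function name `build_shot_prompt`, the `Review:`/`Sentiment:` layout, and the sample reviews are illustrative assumptions, not a prescribed format.

```python
def build_shot_prompt(task, examples, query):
    """Build a zero-, one-, or few-shot prompt depending on len(examples)."""
    lines = [task, ""]
    for text, label in examples:
        # Each example shows the model the input/output pattern to imitate.
        lines.append(f"Review: {text}")
        lines.append(f"Sentiment: {label}")
        lines.append("")
    # The final item is left unanswered for the model to complete.
    lines.append(f"Review: {query}")
    lines.append("Sentiment:")
    return "\n".join(lines)


TASK = "Classify each review as positive or negative."
EXAMPLES = [  # hypothetical labeled examples
    ("The battery lasts all day.", "positive"),
    ("It broke after a week.", "negative"),
]

zero_shot = build_shot_prompt(TASK, [], "Setup was painless.")
one_shot = build_shot_prompt(TASK, EXAMPLES[:1], "Setup was painless.")
few_shot = build_shot_prompt(TASK, EXAMPLES, "Setup was painless.")
print(few_shot)
```

Keeping the example layout identical to the final, unanswered item is what lets the model infer the expected format.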

Chain of Thought Prompting: Writing prompts that include a step-by-step reasoning process, encouraging the LLM to follow a similar structured approach in its response.

This technique breaks down complex tasks into a series of smaller, more manageable steps. This helps the LLM to reason about the task and generate more accurate and consistent results.

It involves crafting prompts that encourage the LLM to “think out loud” or explain its reasoning process step by step, useful in problem-solving or educational contexts.
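
One common way to apply this is to show a worked example with its reasoning spelled out and then cue the model to reason the same way. The sketch below only builds the prompt text; the worked example, the function name `build_cot_prompt`, and the closing cue phrase are illustrative assumptions.

```python
# A hypothetical worked example whose answer spells out each reasoning step.
WORKED_EXAMPLE = (
    "Q: A shop sells pens at 3 for 2 dollars. How much do 12 pens cost?\n"
    "A: 12 pens is 12 / 3 = 4 groups of three. "
    "Each group costs 2 dollars, so the total is 4 * 2 = 8 dollars. "
    "The answer is 8.\n"
)


def build_cot_prompt(question, worked_example=WORKED_EXAMPLE):
    """Prefix the question with a reasoned example and a step-by-step cue."""
    return (
        f"{worked_example}\n"
        f"Q: {question}\n"
        "A: Let's think step by step."
    )


prompt = build_cot_prompt(
    "A train travels 60 km in 45 minutes. What is its speed in km/h?"
)
print(prompt)
```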

Counterfactual Prompting: Asking the LLM to consider alternative scenarios or outcomes, such as “What if the industrial revolution never happened?” to explore complex historical or hypothetical questions.

Iterative Prompt Refinement: Starting with a basic prompt and iteratively refining it based on the LLM’s responses to achieve more accurate or relevant outputs.

It involves using a series of prompts where each subsequent prompt refines or corrects the LLM’s previous response, guiding it towards a more accurate or relevant answer.
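
The refinement loop can be sketched as follows, assuming a `model` callable that maps a prompt string to a response string and a `check` callable that returns feedback text or `None` when the response is acceptable. Both are hypothetical stand-ins; `fake_model` below is a scripted placeholder, not a real LLM client.

```python
def refine(prompt, model, check, max_rounds=3):
    """Call `model`, appending feedback from `check` until the response passes."""
    history = []
    response = None
    for _ in range(max_rounds):
        response = model(prompt)
        history.append((prompt, response))
        feedback = check(response)
        if feedback is None:  # None means the response is acceptable
            break
        prompt = f"{prompt}\n\nRevise your previous answer. {feedback}"
    return response, history


def fake_model(prompt):
    """Stand-in for an LLM: answers at length unless told to be brief."""
    if "at most 5 words" in prompt:
        return "Paris is the capital."
    return "The capital city of France, a country in Europe, is Paris."


def word_limit_check(response, limit=5):
    """Return corrective feedback if the response is too long, else None."""
    if len(response.split()) > limit:
        return f"Answer in at most {limit} words."
    return None


answer, history = refine(
    "What is the capital of France?", fake_model, word_limit_check
)
print(answer)        # Paris is the capital.
print(len(history))  # 2
```

In practice the check is often a human reading the output; automating it, as here, is only possible when the acceptance criterion can be written down.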

Negative Prompting: Including instructions about what not to do or include in the response, which can help in avoiding undesirable outputs.

Prompt Chaining: Linking multiple prompts together to guide the LLM through a series of related tasks or thought processes.
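
A minimal sketch of a chain, assuming a `model` callable that maps a prompt string to a response string. The step templates and the `echo_model` stand-in are illustrative; a real chain would call an actual LLM at each step.

```python
def run_chain(steps, model, initial_input):
    """Feed each step's output into the next step's template."""
    result = initial_input
    for template in steps:
        prompt = template.format(input=result)
        result = model(prompt)
    return result


STEPS = [  # hypothetical three-step chain
    "Extract the key facts from this text:\n{input}",
    "Write a one-sentence summary from these facts:\n{input}",
    "Translate this summary into French:\n{input}",
]

seen_prompts = []


def echo_model(prompt):
    """Stand-in for an LLM that records each prompt it receives."""
    seen_prompts.append(prompt)
    return f"<output of step {len(seen_prompts)}>"


final = run_chain(STEPS, echo_model, "LLMs need precise instructions.")
print(final)  # <output of step 3>
```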

Contextual Prompting: Providing background information or context to help the LLM understand the scenario or subject matter better.

It involves embedding a set of instructions or context within a prompt to influence the LLM’s response, such as providing a brief backstory or setting parameters for a creative writing task.

Analogical Prompting: Using analogies or comparisons to familiar concepts to guide the LLM’s understanding of a less familiar topic.

Hybrid Prompting: Combining elements of different techniques (like zero-shot and few-shot) to create a more effective prompt.

Prompt Templating: Creating a template for prompts that can be reused across similar tasks, with only minor modifications needed for each specific case.

It involves creating a set of standardized prompts that can be reused for similar tasks or queries, ensuring consistency and efficiency in the LLM’s responses.
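
As a small illustration, Python's standard-library `string.Template` is one way to express a reusable prompt. The template text and field names (`product`, `tone`, `message`, `max_sentences`) are made up for this sketch.

```python
from string import Template

# A reusable prompt with named placeholders; only the values change per case.
SUPPORT_REPLY = Template(
    "You are replying to a customer of $product.\n"
    "Tone: $tone\n"
    "Customer message: $message\n"
    "Write a reply of no more than $max_sentences sentences."
)

prompt = SUPPORT_REPLY.substitute(
    product="Acme Notes",
    tone="friendly and concise",
    message="I can't find the export button.",
    max_sentences=3,
)
print(prompt)
```

`substitute` raises `KeyError` when a placeholder is left unfilled, which catches incomplete prompts before they reach the model.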

Conditional Prompting: Designing prompts that include conditions or qualifiers to guide the LLM’s response in a specific direction.

Meta-Prompting: Crafting prompts that instruct the LLM on how to approach prompt construction or refinement itself.

It involves asking the LLM to generate its own prompts based on a given topic or goal, effectively using the LLM to aid in the prompt engineering process itself.

The term is also used for a single, task-agnostic prompt that steers an LLM across a variety of tasks. The prompt does not train the model; it supplies one general-purpose set of instructions that can be reused across a wide range of applications.

Role-Based Prompting: Assigning a specific role or persona to the LLM in the prompt, which can influence the style and substance of its responses.
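
Many chat-style LLM interfaces accept a list of messages in which a "system" message sets the role and a "user" message carries the request. The sketch below assumes that convention; the exact field names vary by provider, and `build_role_messages` is a hypothetical helper.

```python
def build_role_messages(role_description, user_message):
    """Return a chat-style message list with the persona in the system slot."""
    return [
        {"role": "system", "content": role_description},
        {"role": "user", "content": user_message},
    ]


messages = build_role_messages(
    "You are a patient high-school physics teacher. "
    "Explain ideas with everyday examples and avoid jargon.",
    "Why is the sky blue?",
)
for message in messages:
    print(f"{message['role']}: {message['content']}")
```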

Self-Consistency: This technique samples several independent responses to the same prompt, typically each with its own chain of reasoning, and keeps the answer that appears most often. Because a single flawed reasoning path is outvoted by the others, it helps to prevent the LLM from returning nonsensical or contradictory output.
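
Self-consistency is commonly implemented as a majority vote over several sampled answers. The sketch below shows only that voting step, assuming a `sample_fn` callable that returns one final answer per call; `fake_sampler` is a scripted stand-in for repeated LLM sampling at a non-zero temperature.

```python
from collections import Counter


def self_consistent_answer(sample_fn, prompt, n_samples=5):
    """Sample several answers and return the most common one with its vote share."""
    answers = [sample_fn(prompt) for _ in range(n_samples)]
    winner, votes = Counter(answers).most_common(1)[0]
    return winner, votes / n_samples


# Scripted answers standing in for five independent model samples.
scripted = iter(["42", "41", "42", "42", "40"])


def fake_sampler(prompt):
    return next(scripted)


answer, share = self_consistent_answer(
    fake_sampler, "What is 6 * 7? Think step by step."
)
print(answer, share)  # 42 0.6
```

A low vote share is a useful signal in its own right: it suggests the model is unsure and the answer deserves a closer look.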

ReAct: This technique interleaves reasoning and acting: the LLM writes a reasoning step, chooses an action such as a tool or API call, observes the result, and then reasons again. This is particularly useful for tasks that require both planning and execution and that involve interaction with the environment.
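
The loop behind ReAct can be sketched in a few lines. Everything here is an illustrative assumption: the `Thought:`/`Action: tool[arg]`/`Final Answer:` text format, the `react_loop` helper, the `multiply` tool, and `scripted_model`, which stands in for an LLM.

```python
import re


def react_loop(model, tools, question, max_steps=5):
    """Alternate model steps (thought plus action) with tool observations."""
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        step = model(transcript)
        transcript += step + "\n"
        final = re.search(r"Final Answer: (.*)", step)
        if final:
            return final.group(1).strip(), transcript
        action = re.search(r"Action: (\w+)\[(.*?)\]", step)
        if action:
            name, arg = action.groups()
            # Run the requested tool and feed the result back to the model.
            transcript += f"Observation: {tools[name](arg)}\n"
    return None, transcript


def multiply(arg):
    """Toy tool: multiply two comma-separated integers."""
    a, b = (int(part) for part in arg.split(","))
    return a * b


def scripted_model(transcript):
    """Stand-in for an LLM: acts first, answers once an observation exists."""
    if "Observation:" not in transcript:
        return "Thought: I should use the calculator.\nAction: multiply[17, 23]"
    result = transcript.rsplit("Observation: ", 1)[1].strip()
    return f"Thought: The tool returned the product.\nFinal Answer: {result}"


answer, transcript = react_loop(
    scripted_model, {"multiply": multiply}, "What is 17 times 23?"
)
print(answer)  # 391
```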

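Step-Back Prompting: This technique first asks the LLM a more general "step-back" question so that it abstracts from the low-level details to the high-level concept or principle involved, and then uses that principle to answer the original question. This is useful for tasks that require the LLM to understand the underlying principles of a problem.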
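
Step-back prompting can be sketched as two model calls: one to elicit the principle, one to answer with it. The prompt wording, the `step_back_answer` helper, and the `canned_model` stand-in are assumptions made for illustration.

```python
def step_back_answer(model, question):
    """First ask for the underlying principle, then answer using it."""
    abstraction_prompt = (
        "What general principle or concept is needed to answer the question "
        "below? State the principle only.\n\n"
        f"Question: {question}"
    )
    principle = model(abstraction_prompt)
    grounded_prompt = (
        f"Principle: {principle}\n\n"
        f"Using this principle, answer the question.\nQuestion: {question}"
    )
    return principle, model(grounded_prompt)


def canned_model(prompt):
    """Stand-in for an LLM with one canned reply per stage."""
    if prompt.startswith("Principle:"):
        return "The pressure falls to a quarter of its original value."
    return "Ideal gas law: PV = nRT."


principle, answer = step_back_answer(
    canned_model,
    "What happens to the pressure of an ideal gas if the temperature "
    "doubles and the volume increases eightfold?",
)
print(principle)
print(answer)
```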

Analogical Prompting: As introduced above, this technique uses analogies to help LLMs understand new concepts or problems. It is most helpful for tasks that are difficult to explain in a straightforward way, because the analogy gives the LLM a familiar frame of reference.

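Least to Most Prompting: This technique breaks a problem into a sequence of subproblems ordered from simplest to hardest and prompts the LLM to solve them in turn, feeding each answer into the next prompt until it reaches the final conclusion. This can be helpful for tasks that require the LLM to reason about complex information.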
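
A minimal sketch of the sequential-solving stage follows. It assumes the subquestions have already been produced (in practice the LLM itself is usually asked to generate them) and uses a lookup-table `canned_model` in place of a real LLM; both the helper names and the sample problem are illustrative.

```python
def least_to_most(model, problem, subquestions):
    """Solve subquestions in order, carrying earlier answers forward as context."""
    context = f"Problem: {problem}\n"
    answer = None
    for sub in subquestions:
        prompt = f"{context}\nQ: {sub}\nA:"
        answer = model(prompt)
        # Append the solved subquestion so later prompts can build on it.
        context += f"\nQ: {sub}\nA: {answer}\n"
    return answer, context


CANNED = {  # hypothetical model replies, keyed by subquestion
    "How long does one round trip take?": "4 + 1 = 5 minutes",
    "How many round trips fit in 15 minutes?": "15 / 5 = 3 trips",
}


def canned_model(prompt):
    """Stand-in for an LLM: looks up the last question in the prompt."""
    last_question = prompt.rsplit("Q: ", 1)[1].split("\nA:")[0]
    return CANNED[last_question]


answer, context = least_to_most(
    canned_model,
    "It takes Amy 4 minutes to climb a slide and 1 minute to slide down. "
    "The slide closes in 15 minutes. How many times can she slide?",
    list(CANNED),
)
print(answer)  # 15 / 5 = 3 trips
```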

Self-Ask Prompting: This technique encourages the LLM to ask itself questions in order to better understand the task at hand. This can be helpful for tasks that require the LLM to think critically and solve problems on its own.

Multi-step Task Completion: Designing a series of prompts where each response is used as a stepping stone to complete a larger task, such as breaking down a complex research question into smaller, manageable parts.

Bias Mitigation Strategies: Implementing prompts that are specifically designed to minimize biases in LLM responses, for example, by avoiding leading questions or framing prompts in a neutral, balanced manner.

Each of these techniques involves a strategic approach to interacting with LLMs, often with the goal of enhancing accuracy, creativity, efficiency, or depth in the LLM’s responses. They move beyond simply crafting a single, effective prompt (prompt design) to employing a more comprehensive methodology for engaging with LLM systems.

Next Steps

Follow-on articles in this series describe in detail how to apply these prompt engineering techniques in real-world use cases. In many practical use cases, we will combine several of these techniques to achieve the results we want. If the results still fall short of expectations, we have to move on to other strategies: full fine-tuning, partial fine-tuning, or even building a purpose-built custom LLM.

Here is an example interaction involving advanced Prompt Engineering techniques: Precision Crafted Prompts…

Terminology

| Term | Definition |
| --- | --- |
| Prompt design | Prompt design is about creating individual prompts to effectively communicate tasks or queries to an AI model. It requires creativity and clarity in formulating questions or tasks, often involving specific wording and context setting to guide the AI towards the desired response. This technique is particularly valuable in daily interactions with language models and in designing queries for information retrieval systems. |
| Prompt engineering | Prompt engineering is an extensive and systematic approach involving the design of individual prompts and strategies for interacting with AI models across multiple interactions to achieve complex goals. It includes techniques like prompt chaining, templated responses, understanding the impact of model parameters on responses, and systematically refining prompts for optimal performance. This approach is commonly used in professional and research settings to enhance the efficiency, accuracy, and reliability of Large Language Model (LLM) systems in complex tasks. |

Summary

In this article we explored the field of Prompt Engineering, particularly for Large Language Models (LLMs). Prompt Engineering, in contrast to Prompt Design, is broader and more strategic, encompassing a range of techniques that help you achieve complex use cases with generative AI.

Key techniques in prompt engineering include Zero-Shot, One-Shot, and Few-Shot Learning, which give the AI no examples or only a few. Other methods like Chain of Thought Prompting, Counterfactual Prompting, and Iterative Prompt Refinement involve specific strategies to guide the AI’s reasoning and output. Advanced strategies also include Negative Prompting, Contextual Prompting, Analogical Prompting, and Hybrid Prompting, each serving a different purpose, such as avoiding undesirable outputs or supplying background the model needs.

The field is rapidly evolving, with new techniques emerging regularly. Prompt engineering is essential in professional, business, or research environments where maximizing LLM efficiency and reliability is crucial. This series of articles presents real-world examples and techniques that illustrate how to shape your AI interactions to achieve your desired outcomes. This comprehensive approach helps move you toward a more sophisticated methodology for engaging with Generative AI systems.

Terms to Remember

Prompt Design, Prompt Engineering, Zero-Shot Learning, One-Shot Learning, Few-Shot Learning, Chain of Thought Prompting, Counterfactual Prompting, Iterative Prompt Refinement, Negative Prompting, Prompt Chaining, Contextual Prompting, Analogical Prompting, Hybrid Prompting, Prompt Templating, Conditional Prompting, Meta-Prompting, Role-Based Prompting, Self-Consistency, ReAct, Step-Back Prompting

Introduction to Generative AI Series

Part 1 – The Magic of Generative AI: Explained for Everyday Innovators

Part 2 – Your How-To Guide for Effective Writing with Language Models

Part 3 (this article) – Precision Crafted: Mastering the Art of Prompt Engineering for Pinpoint Results

Part 4 – Precision Crafted Prompts: Mastering Incontext Learning

Part 5 – Zero-Shot Learning: Bye-bye to Custom ML Models?