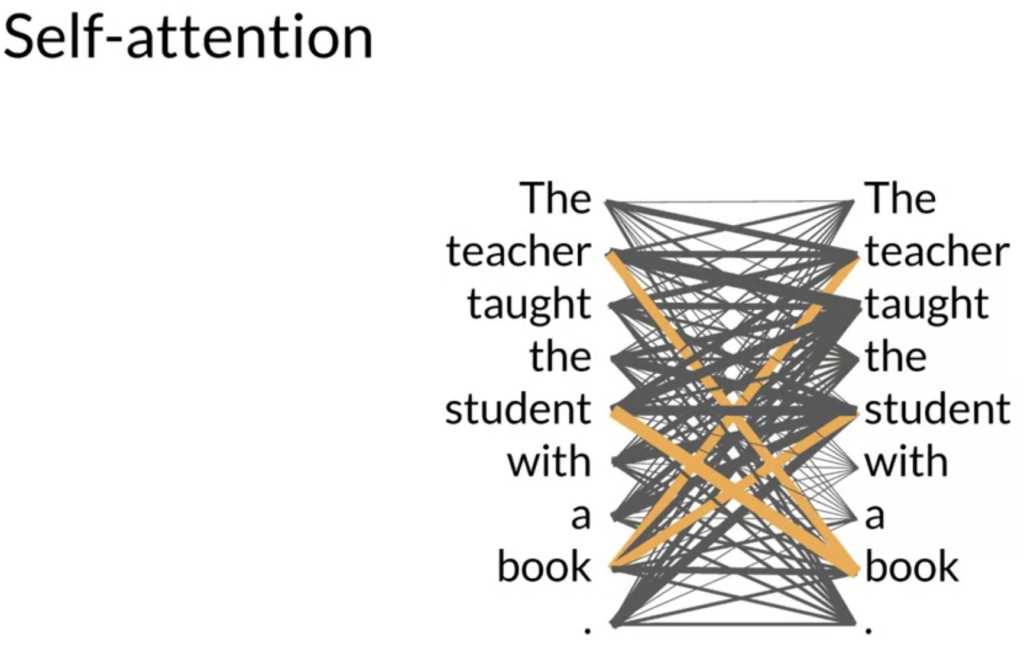

During training, a Large Language Model (LLM) learns the parameters from which attention weights are computed. For a given sentence, these weights express how strongly each word attends to, or draws information from, every other word. An attention map visualizes them: lines connect pairs of words, and the thickness of each line reflects the strength of the connection. A single map can therefore depict the attention relationships between each word and every other word in the sentence. In this stylized example, the word ‘book’ attends strongly to both ‘teacher’ and ‘student’.
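The following is a minimal sketch of how the numbers behind such a map can be computed. It assumes standard scaled dot-product attention, where each row of weights is a softmax over query-key dot products divided by the square root of the vector size. It also assumes identity query and key projections for simplicity. The sentence and the 3-dimensional word vectors are hypothetical and were hand-picked so that `book` lines up with `teacher` and `student`. They are not taken from any trained model. The map is printed as a grid rather than drawn as lines: each row is one word's attention distribution, and a larger number corresponds to a thicker line.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_weights(queries, keys):
    """Row i holds softmax over j of (q_i . k_j) / sqrt(d)."""
    d = len(keys[0])
    weights = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights.append(softmax(scores))
    return weights


def print_attention_map(tokens, attn):
    """Print the attention map as a grid; each row sums to 1."""
    width = max(len(t) for t in tokens)
    print(" " * width, " ".join(f"{t:>{width}}" for t in tokens))
    for t, row in zip(tokens, attn):
        print(f"{t:>{width}}", " ".join(f"{w:>{width}.2f}" for w in row))


# Hypothetical toy sentence and hand-picked vectors (not from a trained model).
tokens = ["teacher", "reads", "student", "book"]
vectors = [
    [1.0, 0.0, 1.0],  # teacher
    [0.0, 1.0, 0.0],  # reads
    [1.0, 0.0, 0.8],  # student
    [1.0, 0.0, 1.0],  # book
]

# Assumption: queries and keys are the raw vectors (identity projections).
attn = attention_weights(vectors, vectors)
print_attention_map(tokens, attn)
```

In the `book` row, the weights on `teacher` and `student` are much larger than the weight on `reads`. This is the pattern the stylized figure draws as thick lines. In a real model, the queries and keys come from learned projection matrices, and each attention head produces its own map.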

See Also: Attention, Attention weights, Self attention