Artificial Intelligence has garnered a substantial following since 2022, owing to its capacity to fulfill a broad spectrum of use cases that were previously deemed unattainable for any software system. One noteworthy example of this trend is OpenAI’s renowned ChatGPT system. By merely posing a question, individuals can now obtain intelligent, human-like responses. While people generally enjoy asking questions, they frequently encounter challenges in locating the right experts who can provide accurate answers. This is where ChatGPT, underpinned by the innovative ‘Generative AI’ technology, abbreviated as ‘GenAI,’ has made a significant and well-received entrance onto the scene.

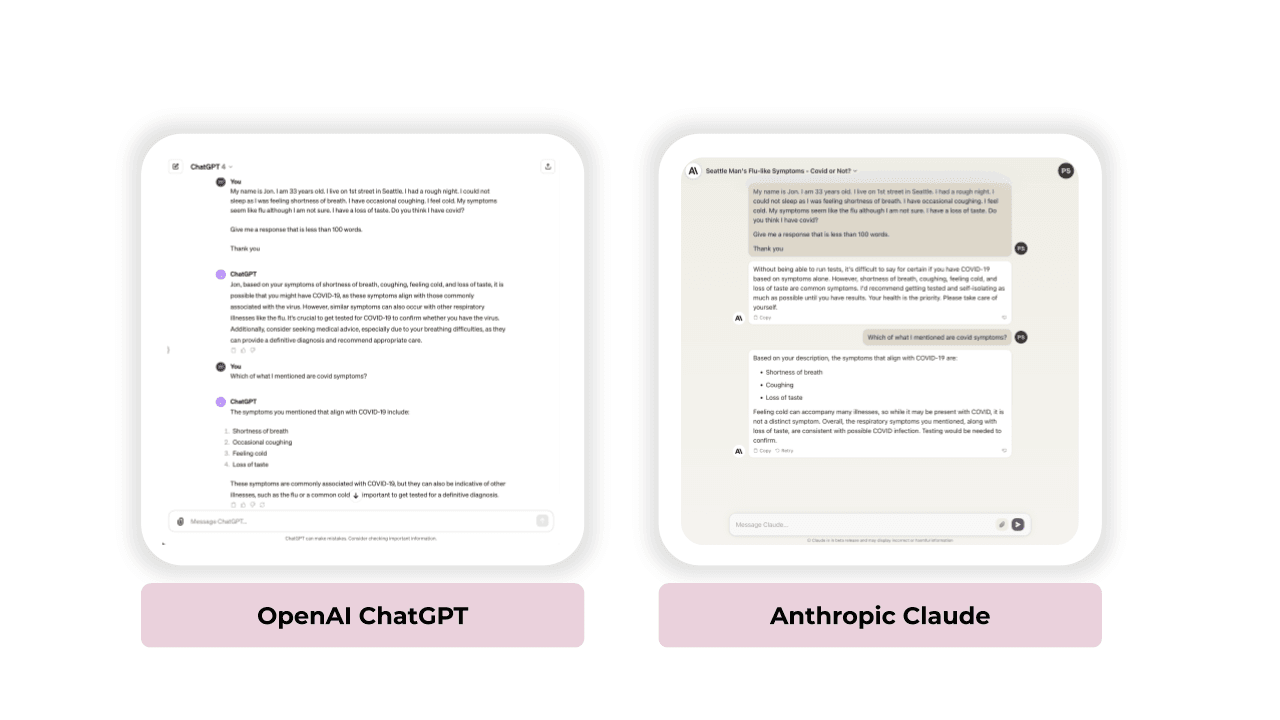

As an illustration, one could present ChatGPT with a set of health symptoms and inquire whether they indicate a COVID-19 infection. The GenAI system had not encountered this particular combination of circumstances before, yet it still managed to deliver a good response.

Note: Sections of text shown in green italics are prompts that can be entered verbatim in a chat application such as ChatGPT, Bard or other.

| Input from the user | Response from GenAI system (ChatGPT) |

| My name is Jon. I am 33 years old. I live on 21st street in Seattle. I had a rough night. I could not sleep as I was feeling shortness of breath. I have occasional coughing. I feel cold. My symptoms seem like the flu although I am not sure. I have a loss of taste. Do you think I have covid? Give me a response that is less than 100 words. Thank you |

I’m not a doctor, but the symptoms you’ve described can be associated with COVID-19, though they are not conclusive on their own. It’s important to consult with a healthcare provider for a proper diagnosis. They may recommend a COVID-19 test and advise on the next steps. Meanwhile, it’s wise to stay isolated to prevent potential spread of illness and rest as much as possible. Please seek medical attention promptly. |

Four Most Popular Chat Systems Built With Different Large Language Models

In addition to ChatGPT, there are three other highly capable chat systems that perform at a similar level. They are illustrated below.

Figure 1: OpenAI ChatGPT and Anthropic Claude

Figure 2: Microsoft Bing Chat and Google Bard

Terminology

Let us now discuss four terms that were illustrated above. These are the most basic of terms we want to be familiar with and using correctly.

| GenAI | GenAI is short for Generative AI. It is a type of AI system that can understand text instructions almost like a well-educated person. When you give it a task through text, it can create not just text, but also images, videos, 3D, and audio. This human-like ability comes from its extensive training on a wide range of high-quality human-generated content, such as books, Wikipedia articles, and specialized texts. This helps it to understand and mimic human styles and patterns in its creations, allowing it to generate outputs that often seem surprisingly natural and creative. |

| Model | The GenAI system centers on a core software component known as the “model,” often referred to as the “foundation model” (FM). This nomenclature draws a parallel between the model’s versatility and a building’s foundation, as it serves as a robust base upon which various types of structures can be constructed. For reference this model is also frequently referred to by its expanded name: “Large Language Model” (LLM). |

| Prompt | The text “input” provided to the GenAI system is referred to as a Prompt.The concept of a Prompt is an instructional tool frequently employed by language arts educators to guide their students in selecting the type of essay to write. Consequently, it represents a valuable adaptation for GenAI. |

| Completion | The GenAI system’s “response” is referred to as a Completion because it is specifically engineered to finish the text input it receives. When you supply a prompt or a fragment of a sentence, the AI system analyzes this input and produces text to bring the thought to a conclusion, address the query, or extend the conversation—akin to finishing a sentence or an idea. |

Let us say the user now has a follow on question like below.

| Input from the user | Response from GenAI system |

| <Previous question, repeated here for illustration> My name is Jon. I am 33 years old. I live on 1st street in Seattle. I had a rough night. I could not sleep as I was feeling shortness of breath. I have occasional coughing. I feel cold. My symptoms seem like the flu although I am not sure. I have a loss of taste. Do you think I have covid?Give me a response that is less than 100 words. Thank you |

<Previous response, repeated here for illustration> I’m not a doctor, but the symptoms you’ve described can be associated with COVID-19, though they are not conclusive on their own. It’s important to consult with a healthcare provider for a proper diagnosis. They may recommend a COVID-19 test and advise on the next steps. Meanwhile, it’s wise to stay isolated to prevent potential spread of illness and rest as much as possible. Please seek medical attention promptly. |

| <follow on question> Which of what I mentioned are covid symptoms? |

<Response to follow on question> The symptoms you mentioned that align with common COVID-19 symptoms include:Shortness of breathOccasional coughingFeeling coldLoss of tasteThese symptoms are also common in other illnesses like the flu. It’s important to get tested for COVID-19 to confirm whether these symptoms are due to COVID-19 or another illness. |

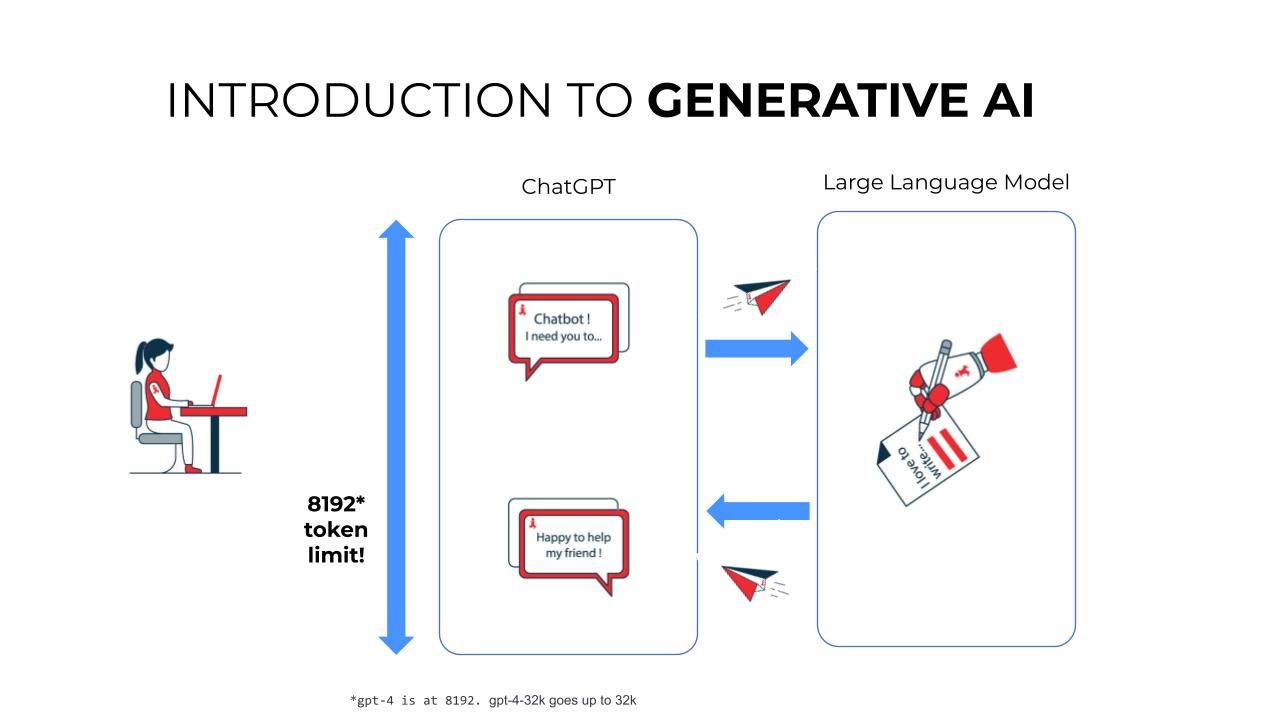

To tackle this question, the GenAI system must have access to the preceding context, which includes all previous prompts and responses. To this historical context, we introduce a new query and its expected response.

This amalgamation of past prompts and responses, along with the new query and anticipated response, is denoted as the “context” window. Its size remains a fixed value, albeit varying from one model to another.

Terminology

| Token | In the world of GenAI, a ‘token’ serves as the fundamental unit of text that the model processes. All text-based GenAI models have undergone training on billions of words of text, meticulously designed to effectively assimilate the meaning of these sentences and word-packed paragraphs.Given that words can exhibit complex conjugations of their constituent parts, the system dissects these words into subparts, each referred to as a ‘token.’ This approach enables the model to comprehensively grasp the underlying depth of word meanings. For instance, the word ‘unbelievable’ might be tokenized into three tokens: ‘un,’ ‘believ,’ and ‘able,’ with each token contributing nuanced significance to the model’s understanding. |

| Context | During any interaction with the model, we provide it with prior history, encompassing previous prompts and their corresponding responses, along with a new follow-up prompt. Subsequently, the system generates a fresh response. The combined content of the prior history, the new prompt, and the resulting response must adhere to a predetermined limit of tokens.For instance, GPT-4 is capable of processing a maximum of 8,192 tokens during any given session. This total includes all elements: the user’s current input, the potential response to that input, as well as the history of prior prompts and their corresponding responses. Should the total token count surpass the designated threshold, GPT will automatically discard the oldest history. |

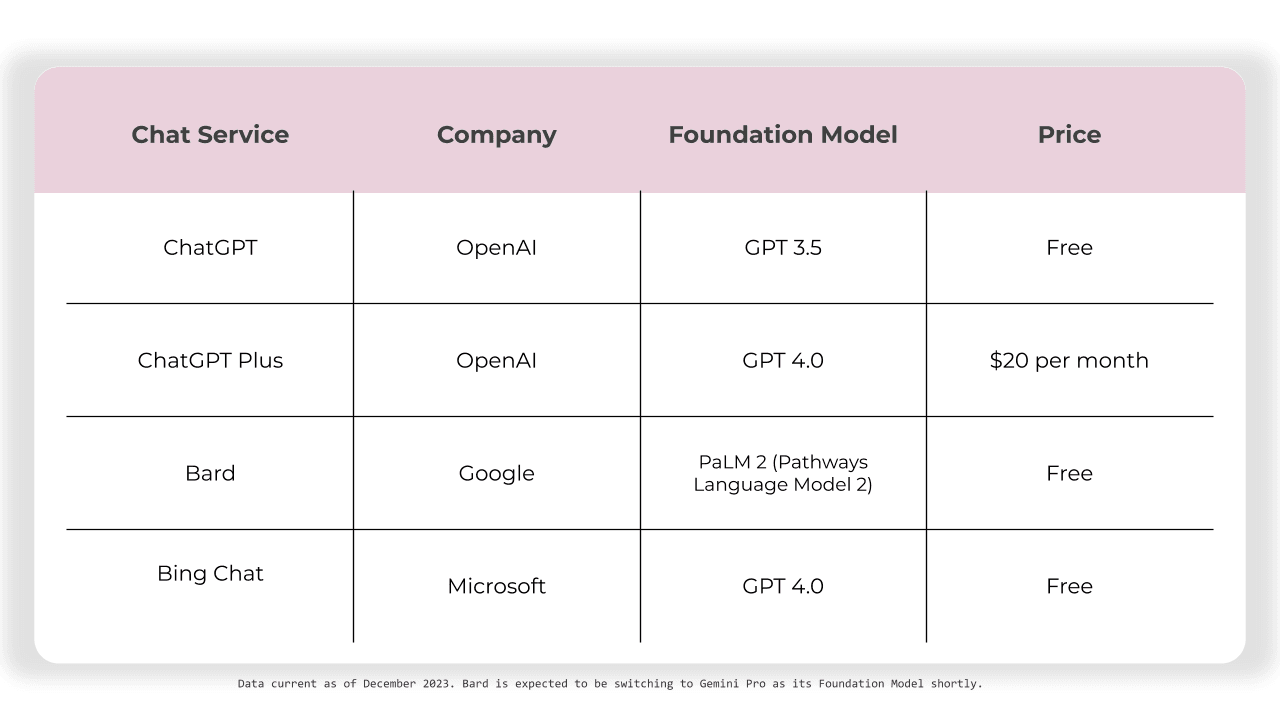

The ChatGPT system is constructed upon a software framework known as the “foundation model,” frequently referred to as the “large language model.” The chat interface, where you input text, receive responses, and engage in ongoing conversations, is distinct from the underlying model. As of the time of this writing, the ChatGPT chatbot interface offers access to several models, some of which are available for free, while others require a monthly subscription fee. These models include GPT-4 and GPT-3.5.

Figure 3: The input Prompt, Large Language Model and the output Completion

Here is a comparison of four different chat systems including the names of the underlying LLM.

Figure: Comparing 4 different chat services

Terminology

| Foundation Model | A foundation model, essentially a massive AI software service, is pre-trained on an extensive dataset, particularly textual data in the context of this article. It is engineered for adaptability, allowing it to be easily configured for various downstream tasks, including sentiment analysis, image captioning, and object recognition. These foundation models have the potential to reshape numerous industries, spanning healthcare, finance, and customer service, enabling applications such as fraud detection and personalized customer support. |

| Types of Models | Text-to-text – Prompt is to be supplied in text format. The output of the model is also text. Text-to-image – Prompt is to be supplied in text format. The output of the model is a generated image. Text-to-video – Prompt is to be supplied in text format. The output of the model is a generated video. Text-to-3D – Prompt is to be supplied in text format. The output of the model is a generated 3D model. Text-to-task – Prompt is to be supplied in text format. The output of the model is to perform a task. |

Common tasks done by Text-to-Text models

- Generation: In LLMs, ‘generation’ refers to the production of text based on a given prompt or context. The model generates text by predicting the next word or sequence of words that should logically follow the input. This can be used for creating content, answering questions, writing essays, etc.

- Classification: This is the process of categorizing text into predefined classes or groups. An LLM can be used to determine the sentiment of a sentence (positive, negative, neutral), classify the type of question (factual, opinion-based), or identify the genre of a text, among other tasks.

- Summarization: Summarization is the task of condensing a larger text into a shorter version, preserving the key information and overall message. LLMs can perform summarization by understanding the main points in a text and rewriting them in a concise manner.

- Translation: Translation with LLMs involves converting text from one language to another while maintaining the original meaning. These models can handle multiple languages and are trained on vast datasets, allowing them to provide accurate and contextually relevant translations.

- Search: In the context of LLMs, ‘search’ refers to finding relevant information or answers from a large corpus of text. The model can analyze queries, understand the context, and retrieve or generate responses that are most relevant to the search intent.

- Research: This involves using LLMs to gather, analyze, and synthesize information on a given topic. The model can help in consolidating information from various sources, providing summaries, and even suggesting new angles or areas for exploration based on the existing data.

- Extraction: Extraction is about pulling specific information or data points from a larger text. For example, an LLM can extract dates, names, places, facts, or other specific details from documents, making it useful for tasks like data analysis or information retrieval.

- Clustering: This refers to the grouping of similar texts or information. While not a primary function of LLMs, they can aid in clustering by analyzing text features and similarities, which can be useful in organizing large datasets or understanding patterns in data.

- Content Editing / Rewriting: LLMs can be used to edit or rewrite text, improving grammar, style, clarity, or even changing the tone of the content. They can suggest alternative phrasings, restructure sentences, and ensure the text adheres to certain stylistic or grammatical standards.

Summary

In 2023, a significant milestone in human-like capability to provide intelligent answers to questions was achieved with the introduction of ChatGPT by OpenAI and Bard by Google. These chatbot systems are powered by a sophisticated underlying software architecture known as a foundation model, enabling them to generate text-based responses to user inquiries. As a result, they are collectively referred to as Generative AI systems, or GenAI for short.

Users of GenAI systems, in general, and the foundation model, in particular, provide a starting question to initiate the conversation, known as a ‘prompt.’ The prompt elicits a response from the system, referred to as a ‘completion.’ Subsequent questions are posed in the context of prior exchanges, forming a continuous thread of questions and responses. The cumulative information from the conversation, including the current prompt and response, constitutes the ‘context.’ Each GenAI model has a specific limit on the size of this context; for instance, GPT-4 has a fixed limit of 8,192 tokens.

Numerous tech companies offer commercially available foundation models, with the most prominent ones including the GPT family from OpenAI (such as GPT-3 and GPT-4), PALM from Google, LLaMA from Meta, and Claude 2 from Anthropic. Among these, the three most widely recognized chatbots leveraging a foundation model are OpenAI’s ChatGPT, Microsoft’s Bing Chat, and Google’s Bard.

Terms to remember: Model, Foundation Model, Large Language Model, Prompt, Completion, Context

Links:Bard by Google: https://bard.google.com

Bing Chat by Microsoft: https://chat.bing.com

ChatGPT by OpenAI: https://chat.openai.com

Claude Chat by Anthropic: https://claude.ai

Introduction to Generative AI Series

Part 1 (this article) – The Magic of Generative AI: Explained for Everyday Innovators

Part 2 – Your How-To Guide for Effective Writing with Language Models

Part 3 – Precision Crafted: Mastering the Art of Prompt Engineering for Pinpoint Results

Part 4 – Precision Crafted Prompts: Mastering Incontext Learning

Part 5 – Zero-Shot Learning: Bye-bye to Custom ML Models?